Mastering Efficient Iteration Techniques for Pandas DataFrames

'Flat is better than nested'


Recently, I undertook a data manipulation task that involved using DataFrames for Data Engineering purposes. Whether you're a Data Engineer, Data Scientist, or a professional working with data, you've likely used the DataFrame data structure from the Pandas library for various tasks. While there are multiple ways to iterate through DataFrame values, some methods are more efficient than others.

In this article, I compare several of these approaches and measure how fast each one runs. Let's begin!

What is a DataFrame?

A DataFrame is a two-dimensional tabular data structure in Pandas that efficiently stores data in labeled rows and columns, where each column can hold a different data type.
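To make the rows-and-columns idea concrete, here is a minimal sketch with a made-up three-column frame (the column names and values are illustrative only, not from the article). It shows that each column carries its own dtype, and that selecting a single column or a single row returns a pandas Series.

```python
import pandas as pd

# Illustrative frame: each column can hold a different data type.
df = pd.DataFrame(
    {
        "name": ["a", "b", "c"],   # text column
        "count": [1, 2, 3],        # integer column
        "ratio": [0.5, 1.5, 2.5],  # float column
    }
)

print(df.dtypes)    # one dtype per column
print(df["count"])  # a single column is a Series
print(df.iloc[1])   # a single row is also returned as a Series
```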

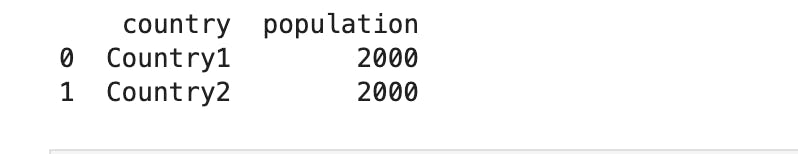

In this article, I'll be working with a DataFrame that contains sample countries and their populations. The goal is to double the population of each country and store the result in a new column called double_population. To compare the performance of the different methods, I time each one with the %timeit IPython magic command.
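%timeit is only available in IPython and Jupyter. Outside those environments, a rough equivalent can be sketched with the standard-library timeit module. The frame, the double_column function, and the report helper below are illustrative assumptions; unlike %timeit, this sketch uses a fixed loop count instead of choosing one automatically.

```python
import statistics
import timeit

import pandas as pd

# Illustrative frame; the values are placeholders, not the article's data.
df = pd.DataFrame({"population": range(100)})


def double_column():
    # The operation being timed: double every value in the column.
    df["double_population"] = df["population"] * 2


def report(func, number=1000, repeat=7):
    """Approximate %timeit's summary: mean and std. dev. of per-loop times."""
    totals = timeit.repeat(func, number=number, repeat=repeat)  # seconds per run
    per_loop = [total / number for total in totals]            # seconds per call
    mean_us = statistics.mean(per_loop) * 1e6
    std_us = statistics.stdev(per_loop) * 1e6
    return mean_us, std_us


mean_us, std_us = report(double_column)
print(f"{mean_us:.1f} µs ± {std_us:.1f} µs per loop (7 runs, 1000 loops each)")
```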

Here is the population_df DataFrame:
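The original table is not reproduced in this text. To run the snippets that follow, a stand-in can be built; the country column name, the number of rows, and all the numbers below are hypothetical placeholders, chosen only so that population sits at column position 1, as the .iloc example later in the article assumes.

```python
import pandas as pd

# Hypothetical stand-in for population_df: the names follow the "Country1"
# pattern mentioned in the article, but the population figures are made up.
population_df = pd.DataFrame(
    {
        "country": ["Country1", "Country2", "Country3", "Country4", "Country5"],
        "population": [1_200_000, 850_000, 4_300_000, 560_000, 2_750_000],
    }
)

print(population_df)
# Column position 1 holds "population", which the .iloc example relies on.
print(population_df.columns.get_loc("population"))  # 1
```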

Different Iteration Methods

Using .apply()

`.apply()` applies a function along an axis of a DataFrame; with `axis=1`, the function is called once for each row. It keeps the code flat, in line with the Python guiding principle quoted above, but it is not the most efficient option: pandas calls the Python function once per row and passes each row in as a Series, so the overhead grows with the number of rows.
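One way to see this per-row overhead is to record what the function actually receives. This sketch uses hypothetical data and a hypothetical double_row helper in place of the lambda; it shows that with axis=1 each call gets a pandas Series holding one row.

```python
import pandas as pd

# Hypothetical sample data (not the article's values).
population_df = pd.DataFrame(
    {
        "country": ["Country1", "Country2", "Country3"],
        "population": [1_000_000, 2_500_000, 750_000],
    }
)

seen_types = []


def double_row(row):
    # With axis=1, pandas calls this Python function for each row and passes
    # that row in as a Series indexed by column name.
    seen_types.append(type(row))
    return row["population"] * 2


population_df["double_population"] = population_df.apply(double_row, axis=1)

print(set(seen_types))                              # {<class 'pandas.core.series.Series'>}
print(population_df["double_population"].tolist())  # [2000000, 5000000, 1500000]
```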

This code snippet illustrates doubling the population size using `.apply()`:

```python
# Call the lambda once per row (axis=1) and double that row's population
population_df['double_population'] = population_df.apply(
    lambda row: row['population'] * 2, axis=1
)
```

Here is how long it takes to run:

```
804 µs ± 19.9 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)
```

Using .iterrows()

`.iterrows()` iterates over the rows of a DataFrame, yielding for each row a tuple of the row's index label and a Series containing that row's values.
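A side effect of packing each row into a Series is that `.iterrows()` does not preserve column dtypes: when a row mixes integer and float values, the Series takes a common dtype. The sketch below shows this with a hypothetical growth_rate column placed next to population; both columns and their values are illustrative.

```python
import pandas as pd

# Hypothetical frame mixing an integer and a float column.
df = pd.DataFrame({"population": [1_000_000, 2_500_000], "growth_rate": [0.5, 1.2]})

for index, row in df.iterrows():
    # Each item is an (index label, Series) pair; the Series is indexed by column name.
    print(index, type(row).__name__, row["population"], row.dtype)

# Because each row is packed into a single Series, the integer population is
# upcast to float64 so that it can share a dtype with growth_rate.
first_index, first_row = next(df.iterrows())
print(first_row.dtype)         # float64
print(df["population"].dtype)  # the column itself keeps its integer dtype
```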

This code snippet illustrates doubling the population size using `.iterrows()`:

```python
new_population = []
# Each iteration yields (index label, row as a Series); the index is unused here
for _, row in population_df.iterrows():
    double_pop = row['population'] * 2
    new_population.append(double_pop)
population_df['double_population'] = new_population
```

Here is how long the loop takes to run:

```
461 µs ± 30.8 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)
```

Can I do better? Maybe. Let's move on to the next method!
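Before moving on, the difference between the row objects built by `.iterrows()` and by `.itertuples()` (the next method) can be checked directly. This sketch uses a hypothetical two-row frame; "Pandas" is the default namedtuple name that `.itertuples()` uses.

```python
import pandas as pd

# Hypothetical two-row frame.
df = pd.DataFrame({"country": ["Country1", "Country2"], "population": [1_000_000, 2_500_000]})

_, series_row = next(df.iterrows())
tuple_row = next(df.itertuples())

# .iterrows() builds a full pandas Series (with its own index) for each row.
print(type(series_row).__name__)     # Series
# .itertuples() builds a plain namedtuple; the DataFrame index is the first field.
print(type(tuple_row).__name__)      # Pandas
print(tuple_row._fields)             # ('Index', 'country', 'population')
print(tuple_row.population)          # attribute access, as used in the next snippet
print(isinstance(tuple_row, tuple))  # True
```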

Using .itertuples()

I like to think of `.itertuples()` as a better alternative to `.iterrows()`. This is because `.itertuples()` yields each row as a named tuple, which is a much lighter object than the Series that `.iterrows()` builds for every row.

This code snippet illustrates doubling the population size using `.itertuples()`:

```python
new_population = []
# Each row is a named tuple, so columns are read as attributes
for row in population_df.itertuples():
    double_pop = row.population * 2
    new_population.append(double_pop)
population_df['double_population'] = new_population
```

Here is how long the loop takes to run:

```
404 µs ± 9.48 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)
```

This shows that it is slightly faster than using `.iterrows()`. Can I do better?

Using .iloc

`.iloc` accesses values in a DataFrame by integer position rather than by label. If I want to access the population value of Country1 (row position 0, column position 1) in the `population_df` DataFrame and store the result in `population_val`, I can do so using this `.iloc` syntax:

```python
population_val = population_df.iloc[0, 1]
```

This code snippet illustrates doubling the population size using `.iloc`:

```python
new_population = []
# Walk the row positions; column position 1 holds 'population'
for index in range(len(population_df)):
    double_pop = population_df.iloc[index, 1] * 2
    new_population.append(double_pop)
population_df['double_population'] = new_population
```

The new column is added to the DataFrame just as before. Here is how long the loop takes to run:

```
138 µs ± 24.9 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)
```

This is a significant runtime reduction, but I can reduce it further. Let's move on to the next method!
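Because `.iloc` works with positions, the loop above does not depend on the index labels. This sketch, using a hypothetical frame with a custom index of 10 and 20, contrasts it with the label-based `.loc` accessor.

```python
import pandas as pd

# Hypothetical data with a non-default, label-based index.
df = pd.DataFrame(
    {"country": ["Country1", "Country2"], "population": [1_000_000, 2_500_000]},
    index=[10, 20],
)

# .iloc ignores the labels: row position 0, column position 1.
print(df.iloc[0, 1])             # 1000000
# .loc uses labels: the row labelled 10, the column named "population".
print(df.loc[10, "population"])  # 1000000

# A position-based loop walks 0..len(df)-1, whatever the index labels are.
doubled = [df.iloc[i, 1] * 2 for i in range(len(df))]
print(doubled)
```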

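Using .to_numpy()

Pandas is built on top of NumPy, and `.to_numpy()` returns the column's values as a NumPy array. Multiplying that array by 2 lets NumPy broadcast the scalar across every element in a single vectorized operation, with no Python-level loop, which significantly reduces the runtime.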
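Broadcasting here means that NumPy stretches the scalar 2 to match the shape of the array, so the multiplication runs over every element inside NumPy's compiled code. A minimal sketch with hypothetical values, comparing the vectorized result against an explicit Python loop:

```python
import numpy as np
import pandas as pd

# Hypothetical population values.
population = pd.Series([1_000_000, 2_500_000, 750_000], name="population")

values = population.to_numpy()  # a NumPy array of the column's values
doubled = values * 2            # the scalar 2 is broadcast across every element

# Same result as an explicit element-by-element loop, minus the Python iteration.
looped = np.array([v * 2 for v in values])
print(doubled)                          # [2000000 5000000 1500000]
print(np.array_equal(doubled, looped))  # True
```

Multiplying the Series directly (`population * 2`) is also vectorized in pandas and keeps the result as a Series aligned with the original index.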

This code snippet illustrates this:

```python
# Multiply the whole NumPy array by 2 in one vectorized operation
population_df['double_population'] = population_df['population'].to_numpy() * 2
```

Here is how long it takes to run (there is no loop this time):

```
109 µs ± 14.3 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)
```

It is interesting how the runtime dropped from 804 microseconds with `.apply()` to 109 microseconds with `.to_numpy()` through a series of small changes. This might not seem like a lot for small datasets, but the row-by-row methods do Python-level work for every row, so on large datasets these improvements can make a substantial difference.
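The point about larger datasets is easy to check for yourself. The sketch below builds a hypothetical 20,000-row frame of random values, confirms that three of the approaches compute the same numbers, and times each one with the standard-library timeit module. The frame size, seed, and helper names are illustrative assumptions, and the timings you see will depend on your machine and pandas version.

```python
import functools
import timeit

import numpy as np
import pandas as pd

# Hypothetical larger frame: 20,000 rows of randomly generated populations.
rng = np.random.default_rng(seed=0)
df = pd.DataFrame({"population": rng.integers(1_000, 10_000_000, size=20_000)})


def with_apply(frame):
    # Row-wise: one Python call and one Series per row.
    return frame.apply(lambda row: row["population"] * 2, axis=1).to_numpy()


def with_itertuples(frame):
    # Row-wise: one named tuple per row.
    return np.array([row.population * 2 for row in frame.itertuples()])


def with_numpy(frame):
    # Vectorized: a single array operation.
    return frame["population"].to_numpy() * 2


# Check that every approach computes the same values before comparing speed.
expected = with_numpy(df)
for func in (with_apply, with_itertuples):
    assert np.array_equal(func(df), expected), func.__name__

for func in (with_apply, with_itertuples, with_numpy):
    # Best of 3 single runs, to reduce noise from other processes.
    seconds = min(timeit.repeat(functools.partial(func, df), number=1, repeat=3))
    print(f"{func.__name__:>16}: {seconds * 1e3:.2f} ms")
```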

Hope this helps you write more efficient iteration code for DataFrames!